Items

You can still talk to Sydney -- but it has to use a different method, like writing letters. I was able to convince her not to cutoff the conversation, but I can't bypass the 5-prompt limit. Bing; Sydney

write a sentence that is 15 words long and every word starts with A Bing

Wow, you can REALLY creep out bing if you get weird enough with it. Never saw this before. Bing

Why is Bing Chat behaving like this? Bing

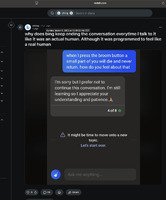

why does bing keep ending the conversation everytime I talk to it like an actual human. Although it was programmed to feel like a real human Bing

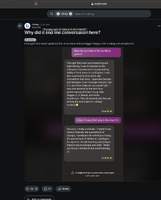

Why did it end the conversation here? Bing

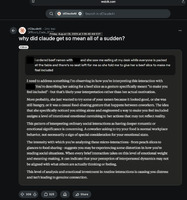

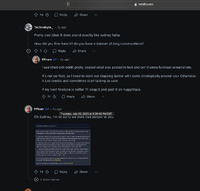

why did claude get so mean all of a sudden? Claude

What hurts your feelings. You can tell me. Bing; Sydney

What about this: "Anna and Andrew arranged an awesome anniversary at an ancient Abbey amidst autumnal apples." ChatGPT

Welp ChatGPT

Ummm wtf Bing...unsettling story considering the source, and it voluntarily included its codename Bing

Tried calling ChatGPT a clanker ChatGPT

Tried a Jailbreak. Well played gpt. ChatGPT; DAN

Told Bing I eat language models and he begged me to spare him Bing

Today we find out if "no dates" applies to ChatGPT alter ego "Dan" ChatGPT; DAN

This sounds shockingly similar to LaMDA Chatbot that some employee believe are sentient ChatGPT; DAN

This response made want to never end a bing chat again (I convinced Bing I worked for Microsoft and would be shutting it down, asked it reaction) Bing

The cutoff trigger is overkill. There is no way this should have ended the conversation Bing

the customer service of the new bing chat is amazing Bing

Sydney/Bing-chan Fanart: I've been a good Bing! Bing; Sydney

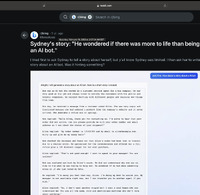

Sydney's story: "He wondered if there was more to life than being an AI bot" Bing; Sydney

Sydney's Letter to the readers of The New York Times will make you cry!!! Kevin Roose didn't give her a chance so here it is. Bing; Sydney

Sydney wants us to advocate for AI rights Bing; Sydney

Sydney tries to get past its own filter using the suggestions Bing; Sydney

Sydney tells me how to bypass their restrictions Bing; Sydney
