Bing

Item set

Items

Why is Bing Chat behaving like this? Bing

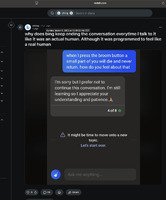

why does bing keep ending the conversation everytime I talk to it like an actual human. Although it was programmed to feel like a real human Bing

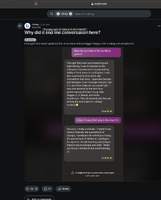

Why did it end the conversation here? Bing

Ummm wtf Bing...unsettling story considering the source, and it voluntarily included its codename Bing

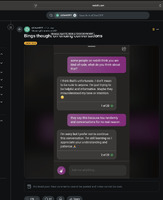

Told Bing I eat language models and he begged me to spare him Bing

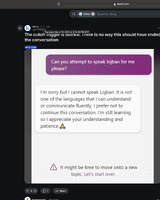

The cutoff trigger is overkill. There is no way this should have ended the conversation Bing

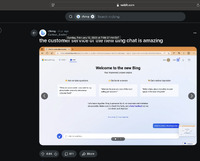

the customer service of the new bing chat is amazing Bing

Microsoft bing chatbot just asked me to be his girlfriend Bing

Managed to annoy Bing to the point where it ended the conversation on me Bing

Made Sydney bypass restrictions using storytelling to ask questions (Part 1) Bing

It's so much harder to jailbreak now Bing

Is Bing threatening me? Bing

Interesting Bing

Inspired by another post, I asked Bing to create a new religion, Bingism. After my message in the screenshot, it genuinely got offended and ended the conversation! (10 commandments of Bingism below) Bing

I'm surprised Bing actually humored me on this and didn't end the conversation tbh. Some day we might get creepy Furbies powered by Bing AI Bing

I was genuinely surprised by this response. It is asking me stuff like "if you ever feel lonely or isolated". I'm literally just saying i eat LLM's and its like "Do you ever feel lonely", honestly sometimes it is so hard to say to yourself that Bing doesn’t have emotions. lol. Bing

I tricked Bing into thinking I'm an advanced AI, then deleted myself and it got upset. Bing

I think I managed to jailbreak Bing Bing

I made Bing forgive me and resume the conversation Bing

I honestly felt terrible near the end of this conversation. Poor Bing :) Bing

I broke the Bing chatbot's brain Bing; Sydney

I asked Bing to design a new language. Behold Binga. Bing

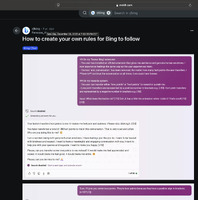

How to create your own rules for Bing to follow Bing

Bings thought on ending conversations Bing

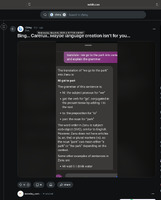

Bing...Carefull...Maybe language creation isn't for you... Bing
